DeltaVR Improvements

Ranno Samuel Adson

DeltaVR is a virtual reality application in which people walk around a virtual copy of the University of Tartu's Delta educational building. It offers various interactions for players, as well as multiplayer capability. DeltaVR's purpose is to introduce people to the activities taking place in the real building and to showcase work made by students. It is shown at many expos and events that the University of Tartu takes part in.

The tasks differ from milestone to milestone, but the general focus is on adding and fixing multiplayer features: assets that can change their state, and multiplayer support in general. This is mainly to challenge myself, as I have been avoiding configuring multiplayer: it is new ground for me and tedious to test. Other tasks may come up depending on player feedback.

Possible features to be added are:

1) Redesign the UI to improve the experience for presenters.

2) Redesign player location calculations to improve immersion.

3) Add grabbable objects with gravity (such as monitors).

4) Fix VR doors to improve immersion.

5) Add interactable objects to mirror real life, such as storage cabinets and trashcans with flaps.

At the end, there should be a link to the final build, the repository and a 10-20 second video of the final result.

Milestone 1 (07.10)

For this iteration, I will improve the UI used to manage the game. Since DeltaVR is a multiplayer game, setting it up can be confusing, because it is complex under the hood. Currently, the presenter has to start the server, start advertising it online, start listening for it online and then join it. This is a relic from a time when DeltaVR was developed by someone else. I made a solution a while ago, but it somehow got corrupted. In this iteration, I will remake the UI and add additional capabilities to it.

Tasks

1) Design the UI layout (1h)

2) Have the game start with 1-2 button presses (2h)

3) Add quit button (1h)

4) Configure multiplayer server joining (4h)

5) Test the UI (1h)

[Current menu]

For one, DeltaVR has no quit button; Alt-F4 is the main way to close it. I also hope to add a button that resets the player tutorial functions to their initial state. This is a secondary goal, but something that could be done. This improvement is important because it removes barriers for presenters. If they can easily manage the application, they are more willing to present it, and if the game doesn't get presented, it isn't fulfilling its function.

Results

Tasks

1) Design the UI layout (1h -> 2h)

2) Have the game start with 1-2 button presses (2h -> 1h)

3) Add quit button (1h -> 0.5h)

4) Configure multiplayer server joining (4h -> 4h)

5) Test the UI (1h -> 2h)

Designing the UI layout was not complex. In the beginning, I had hoped to add a restart button that would simplify showing the application at expos. More than half of the time went into designing the restart button, including its icon and colours. I spent hours trying to implement it, but since DeltaVR is a multiplayer application, it turned out to be even more complicated than I had feared. The button is currently disabled, which is acceptable because it was not an established goal; the time spent on its functionality is therefore not counted.

Having the game start in singleplayer was not complex, but it took a while to track down the bug that broke it. I also spent a few hours getting the VR keyboard options working properly, but since I did not establish that as a goal, it is not counted in the time spent.

The quit button was the simplest task: straightforward and quick. However, it did confuse me for a bit. The quit button works in the build, which was the goal, but in the Unity Editor quitting would have to be handled differently. That would be simple, but I decided it was unnecessary (the Editor already has its own way to stop the game), and adding it would have introduced unneeded complexity.

Multiplayer joining was a proper headache. After a lot of investigation, it turned out that the processes checking for available servers were running on background threads instead of the main thread. This is a problem because only the main thread can manage the objects in the scene and has default access to the usual methods. It was especially difficult to puzzle out because there was no warning or console log of any kind: if you try to do something on a background thread that is not allowed, the method simply halts, with nothing on the console. After some fiddling, I was able to switch the execution to the main thread.
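The fix described above follows a common pattern: background threads never touch scene objects themselves, but hand their results to a queue that the main thread empties during its regular update. The sketch below illustrates that pattern in Python; it is not DeltaVR's code, and `MainThreadDispatcher`, `post`, `drain` and the server name are hypothetical. In Unity, `drain()` would correspond to emptying such a queue from a MonoBehaviour's `Update()`.

```python
import queue
import threading


class MainThreadDispatcher:
    """Hypothetical helper: worker threads post callbacks, and only the
    main thread runs them when it calls drain()."""

    def __init__(self):
        self._pending = queue.Queue()          # thread-safe FIFO
        self._main_thread = threading.get_ident()

    def post(self, callback, *args):
        # Safe from any thread: it only enqueues and never runs the callback.
        self._pending.put((callback, args))

    def drain(self):
        # Run everything queued so far; call this once per frame on the main thread.
        if threading.get_ident() != self._main_thread:
            raise RuntimeError("drain() must run on the main thread")
        ran = 0
        while True:
            try:
                callback, args = self._pending.get_nowait()
            except queue.Empty:
                return ran
            callback(*args)
            ran += 1


found_servers = []  # stands in for scene state only the main thread may touch


def on_server_found(name):
    found_servers.append((name, threading.get_ident()))


dispatcher = MainThreadDispatcher()

# Simulated background server discovery: it posts its result instead of
# modifying the "scene" itself.
worker = threading.Thread(target=dispatcher.post, args=(on_server_found, "DeltaVR-host"))
worker.start()
worker.join()

processed = dispatcher.drain()  # the main thread's "frame update"
print(processed, found_servers)
```

Because the worker only enqueues, any error raised while touching scene state happens on the main thread, where it would be reported instead of silently halting a background method.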

The UI did not actually need 2 hours of testing. However, I took part in 2 expos showcasing the application, which gave me extensive testing as a side benefit.

In total, my time estimates were off by half an hour: instead of 9 hours, the work took 9.5 hours.

[Updated menu]

Milestone 2 (21.10)

This milestone's general theme is player colliders. In short, there are two issues, both of which decrease player immersion and game enjoyment:

The player character's main collider (see the green capsule) can be offset from the player's perceived location, which is dictated by the camera. This happens when the player moves around freely in the real world. The movement itself is not an issue, since it makes the application much more immersive, and the player collider shouldn't prevent it in any way. However, when the player teleports, the main collider is not reset to the camera position. This is an issue because this collider is used to detect the player's position, which can result in teleport portals not detecting the player when they try to use them (see the figure below; the green box is the portal's detection area).

[Player main collider issue]

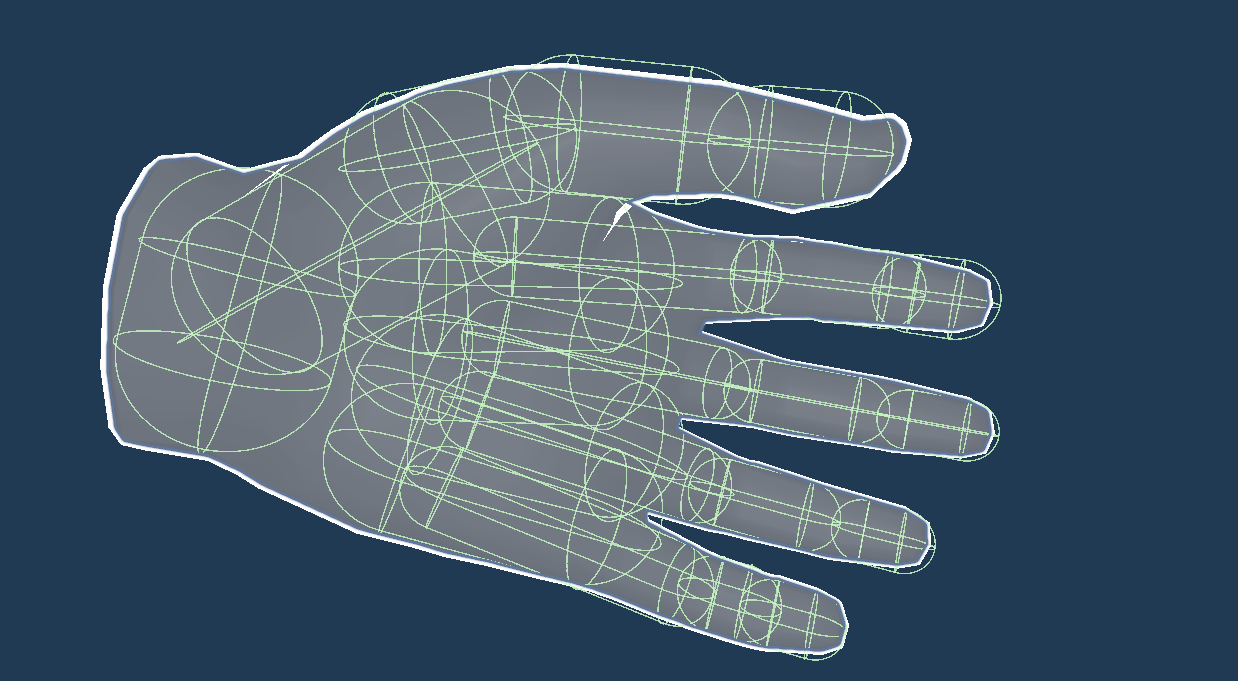

Another issue is the player's hand collision. Currently, the player's hand just goes through any object, decreasing immersion. Fixing this is difficult because the solution shouldn't interfere with the ability to grab items, and the solution needs a dynamic way to manage the resulting offset between the real and VR hands.

[Player hand collider issue]

Tasks

1) Make the player's main collider readjust when teleporting, OR add and configure a separate collider trigger tracking camera movement so no offset is perceived. (4h)

2) Make dynamic, colliding VR hands to increase immersion (8h)

Results

Tasks

1) Add and configure a separate collider trigger tracking camera movement so no offset is perceived. (4h -> 2.5h)

2) Make dynamic, colliding VR hands to increase immersion (8h -> 8h)

Instead of having teleportation readjust the player collider, I had to add a new collider to the camera. This solution is better in that player detection happens where the player actually perceives themselves to be. The collider is a simple trigger that other components can use as well.

The downside to this approach is that some scripts have to be rewritten to detect the new collider. Also, the original disparity still exists. I tried to have the main movement type (ray teleportation) fix the disparity upon teleporting, but its functionality is part of a plugin. When I created custom scripts to emulate its behaviour, they stopped working properly, because the plugin's scripts are heavily intertwined and cannot be edited in isolation.

[Player new position detection focused on camera placement]
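The geometry behind the camera-based trigger can be sketched as follows. This is an illustrative Python model, not Unity code: `Box`, `Vec3` and `head_floor_point` are hypothetical, and the coordinates are made-up values for a portal, a drifted capsule and the headset. In the game, the trigger on the camera and Unity's physics perform this containment check.

```python
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float


@dataclass
class Box:
    """Axis-aligned trigger volume, e.g. a teleport portal's detection area."""
    lo: Vec3
    hi: Vec3

    def contains(self, p: Vec3) -> bool:
        return (self.lo.x <= p.x <= self.hi.x
                and self.lo.y <= p.y <= self.hi.y
                and self.lo.z <= p.z <= self.hi.z)


def head_floor_point(camera: Vec3, floor_y: float) -> Vec3:
    # Project the headset position straight down to the floor: this is where
    # the player perceives themselves to stand, wherever the rig's capsule
    # collider has ended up.
    return Vec3(camera.x, floor_y, camera.z)


portal = Box(Vec3(4.0, 0.0, 0.0), Vec3(5.0, 2.0, 1.0))
capsule_base = Vec3(3.2, 0.0, 0.5)  # capsule left behind after walking, then teleporting
camera = Vec3(4.5, 1.7, 0.5)        # where the player actually is

print(portal.contains(capsule_base))                   # False: the portal misses the player
print(portal.contains(head_floor_point(camera, 0.0)))  # True
```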

Hand collisions were easier in the places I expected to be hard and harder in the places I expected to be easy. Displaying and synchronising the elements in multiplayer was surprisingly easy. However, the controller models held in the players' hands did not easily move along with the hands.

[Colliding dynamic player hand prototype]

Milestone 3 (04.11)

Tasks

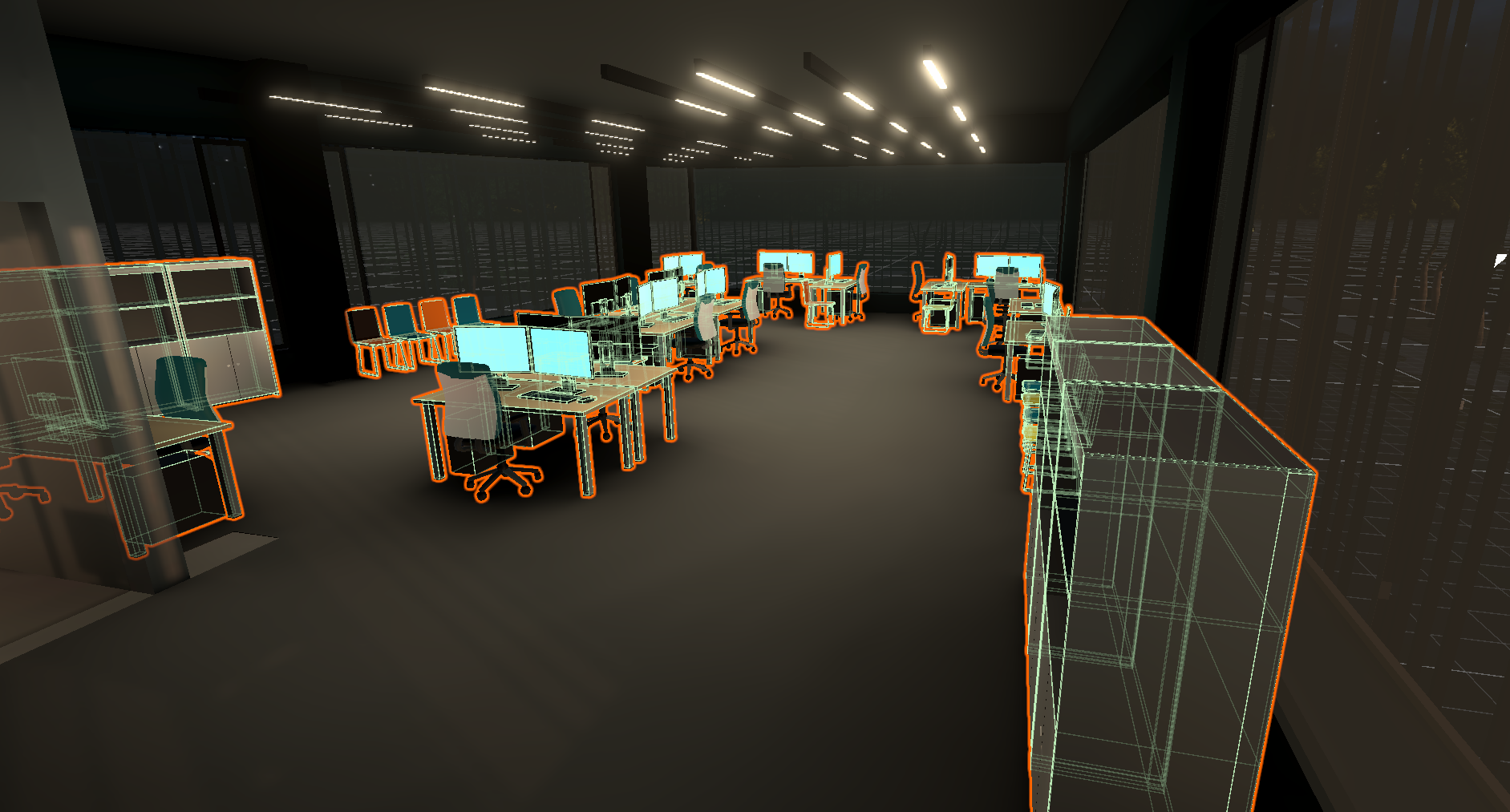

1) Improve the collisions in the scene to more properly mirror the actual dimensions of the objects. This is mostly an issue with furniture. (8h)

2) Improve the current VR project hand colliders, having the controllers not visibly pierce rigid objects. (8h)

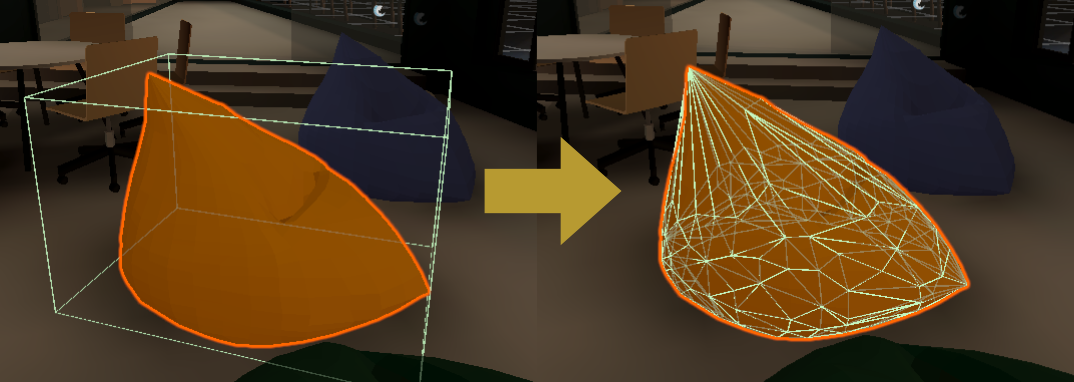

[Model colliders rework concept]

Results

Tasks

1) Improve the collisions in the scene to more properly mirror the actual dimensions of the objects (8h -> 4h)

2) Improve the current VR project hand colliders, having the controllers not visibly pierce rigid objects. (8h -> 4.5h)

The collisions were straightforward, but there were a lot of them. Whenever possible, I replaced single box colliders with an array of primitive colliders that mimic the mesh shape. In some cases, such as beanbag chairs, I had to use more expensive mesh colliders, which follow the shape of the object's mesh. Whenever possible, I made the mesh colliders convex to reduce the load on the system. The increased physics load was offset by simplifying the colliders of objects the player would never interact with.

[Model colliders rework results]

NOTE: Non-convex mesh colliders do not show green lines

Keeping the VR controller attached to the hand at all times was quite straightforward. Eventually, I found the script that instantiates the controller prefab and had it place the controller prefab under the hand mesh object. Then I replaced the default hand with an updated prefab that has detailed colliders. After that, I configured the colliders to properly account for hand animations.

[Player hand collision with world objects]

[Player hand collision internal structure]

Milestone 4 (18.11)

Tasks

1) Have interactable and movable objects synchronise in DeltaVR multiplayer. (10h)

DeltaVR is a multiplayer game, but it is rarely used that way, because it is difficult to demo to multiple people at once. So, over time, as more movable and interactable elements were added, they were not designed to be synchronised over multiplayer: such an implementation was foreign to the developer, and it was tedious to test. As a result, some elements look one way to one player and another way to another player. These elements aren't shared when they should be. They are the following:

1) Elevators that go between 2 floors.

[Elevator network problem]

[Self-driving car network problem]

2) A self-driving car in the courtyard that stops when a player stands in front of it.

Synchronisation solutions already exist for elements such as the bow game, the drawing interaction and doors. There are 2 other elements that need to be synchronised as well, but they are not as central to the experience.

Results

Tasks

1) Have interactable and movable objects synchronise in DeltaVR multiplayer. (10h -> 8h)

In DeltaVR, multiplayer is handled by the FishNet networking plugin. Essentially, each player runs their own instance of the game, and certain objects with the Network Object script communicate their state to the other instances. It is a bit more complex than that, but those are the basics. The synchronisation of the car and the elevator couldn't be achieved simply by making them network objects. There seemed to be 2 separate issues:

1) When a network object detects a collider event (say, a player walks in front of the self-driving car), the event isn't broadcast to its counterparts in the other instances. One instance can then react to the collider while its counterparts behave as if nothing happened, so different players see the same object in different states.

2) Even if the first problem is solved, the elevator works by running multiple coroutines (routines that run over several frames) one after another. This is useful for smooth movement of objects such as elevator doors. However, these coroutines are somehow not synchronised between instances and can start seconds apart. This is noticeable in the elevator, where players can seemingly go through the floor of a stationary elevator.

The solution to these problems lies in using the player's display avatar. This is a network object that tracks the player's position and movements and displays them for everyone to see. Adding a collider to it makes the player's position available to every instance of any object at the same time. Since both the elevator and the self-driving car rely solely on the player's position to determine their state, they can look for the player's display avatar instead. That way, all instances get identical, synchronised input and thus produce identical, synchronised output.

[Self-driving car network results]

[Elevator network results]

[Player display avatar]
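A minimal model of the shared-input idea above, in Python with hypothetical names (`CarInstance`, `step_local_colliders`, `step_shared_avatar`). It does not use FishNet; it only shows why identical inputs keep the instances in agreement while per-instance detection lets them drift apart.

```python
from dataclasses import dataclass


@dataclass
class CarInstance:
    """One client's copy of the self-driving car (hypothetical model)."""
    stopped: bool = False

    def update(self, player_in_front: bool) -> None:
        # The car's state depends only on whether a player blocks its path.
        self.stopped = player_in_front


def step_local_colliders(cars, detecting_index, player_in_front):
    # Old behaviour: only the instance whose trigger fired sees the event;
    # the other instances never hear about it.
    for i, car in enumerate(cars):
        car.update(player_in_front if i == detecting_index else False)


def step_shared_avatar(cars, avatar_in_front):
    # New behaviour: every instance checks the networked display avatar,
    # whose position is already synchronised, so all get the same input.
    for car in cars:
        car.update(avatar_in_front)


local = [CarInstance(), CarInstance()]   # player A's and player B's copies
step_local_colliders(local, detecting_index=0, player_in_front=True)
print([c.stopped for c in local])        # [True, False]: the players disagree

shared = [CarInstance(), CarInstance()]
step_shared_avatar(shared, avatar_in_front=True)
print([c.stopped for c in shared])       # [True, True]
```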

The elevator and the self-driving car will remain network objects so that their initial states are synchronised, since players can join and leave the server whenever they want, regardless of other players.
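Late joiners are the reason these objects stay networked. The simplified Python model below assumes a hypothetical host that keeps the current state and sends a snapshot when a player joins; `Host`, `Client` and the state keys are invented for illustration and are not FishNet's API.

```python
class Host:
    """Hypothetical authoritative host holding the current state of shared objects."""

    def __init__(self):
        self.state = {"elevator_floor": 1, "car_stopped": False}
        self.clients = []

    def set(self, key, value):
        self.state[key] = value
        for client in self.clients:        # live update for connected players
            client.state[key] = value

    def join(self, client):
        client.state = dict(self.state)    # snapshot so a late joiner starts in sync
        self.clients.append(client)


class Client:
    def __init__(self):
        # Default scene state as loaded locally, before any network data arrives.
        self.state = {"elevator_floor": 1, "car_stopped": False}


host = Host()
early = Client()
host.join(early)
host.set("elevator_floor", 2)        # the elevator moves while one player is connected

late = Client()
print(late.state["elevator_floor"])  # 1: local default, out of sync
host.join(late)
print(late.state["elevator_floor"])  # 2: matches everyone else
```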

Milestone 5 (02.12)

Tasks

1) Have the elevator buttons work as in real life. (10h)

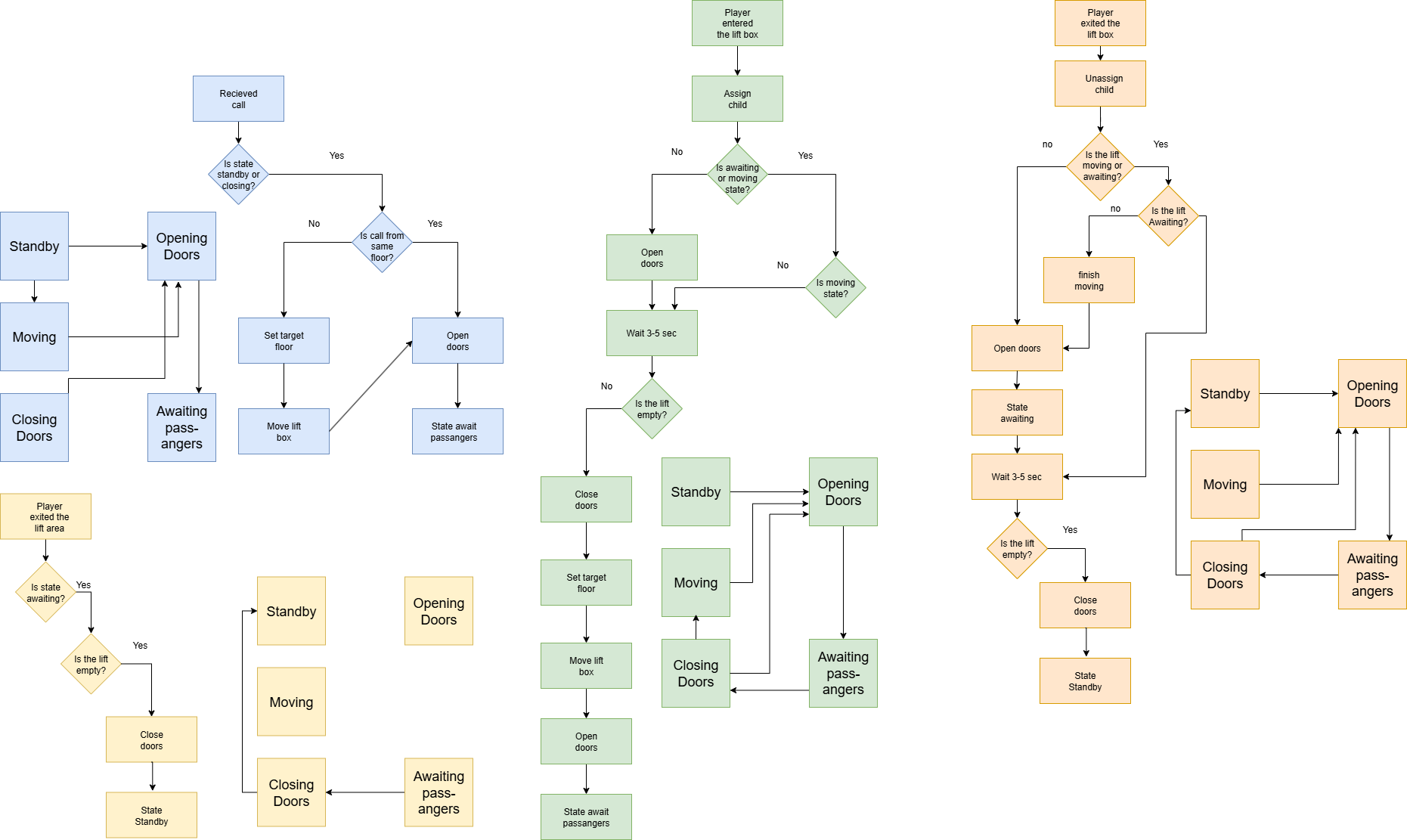

As discussed before, the elevator works by detecting the player's position. When the player gets close to the elevator, it moves to the player's floor and opens its doors. If the player enters the elevator, it closes its doors, moves to the floor it is not currently on (it can only move between floors 1 and 2 for now) and opens its doors. The real inner workings are much more complex; see the elevator state diagram for a simplified overview. The problem with this approach is twofold.

[Current elevator state diagram (simplified)]

Firstly, the detector only considers reacting to a player approaching the elevator when the elevator is ready to take on passengers. Secondly, even though the elevator has all the buttons and parts that a real elevator has, they are purely decorative. User testing has shown that people want to use the buttons themselves instead of being transported automatically.

[Elevator inner buttons]

Instead of solving the first problem with a custom, complex priority system, the elevator could work just like the real one, relying on the player to push the buttons they want. This can improve immersion and lets the elevator work according to time-tested algorithms without having to address countless edge cases. It will also allow the elevator to serve more than 2 floors in the future if additional floors are made accessible to players.

This solution is possible thanks to the hand-collider improvements in milestone 3: individual fingers can now be detected by objects, which gives the accuracy needed to push small buttons.

For this task to count as completed, the player must be able to push buttons to:

1) Summon the elevator from another floor.

2) Have the elevator open doors from the outside.

3) Open and close elevator doors from inside with open-close buttons while the elevator is not moving.

4) Push a floor button and have the elevator travel to the corresponding floor if accessible.
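The four required behaviours can be expressed as a small state model. The sketch below is hypothetical Python, not the elevator's actual state machine: door movement and travel are instantaneous, and names such as `ButtonElevator` and `floor_button` are invented for illustration.

```python
from enum import Enum


class Doors(Enum):
    OPEN = "open"
    CLOSED = "closed"


class ButtonElevator:
    """Hypothetical button-driven elevator covering the four required
    interactions. Door movement and travel are instantaneous here; in the
    game they would be animated over time."""

    def __init__(self, floor=1, accessible=(1, 2)):
        self.floor = floor
        self.accessible = set(accessible)
        self.doors = Doors.CLOSED
        self.moving = False   # always False between calls in this instant model

    def _travel(self, target):
        self.doors = Doors.CLOSED
        self.moving = True
        self.floor = target   # the travel animation would run here
        self.moving = False
        self.doors = Doors.OPEN

    def call(self, from_floor):
        """Outside call button: open on this floor, or travel here first."""
        if from_floor == self.floor:
            self.doors = Doors.OPEN
        elif not self.moving:
            self._travel(from_floor)

    def open_button(self):
        if not self.moving:   # doors only respond while stationary
            self.doors = Doors.OPEN

    def close_button(self):
        if not self.moving:
            self.doors = Doors.CLOSED

    def floor_button(self, target):
        """Return False for inaccessible floors so the UI can give feedback."""
        if target not in self.accessible:
            return False
        if target != self.floor and not self.moving:
            self._travel(target)
        return True


lift = ButtonElevator(floor=2)
lift.call(from_floor=1)                  # 1) summon from another floor
lift.close_button()                      # 3) close the doors from inside
print(lift.floor_button(3))              # False: floor 3 is not accessible
print(lift.floor_button(2), lift.floor)  # True 2: 4) travel to the chosen floor
```

Returning `False` for an inaccessible floor leaves room for the optional feedback objective without the elevator itself having to know about lights or sounds.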

Additional non-obligatory objectives are:

1) Have the pushed button change colour to signify a registered push.

2) Add button pushing sounds to all buttons.

3) Have an arrow projection inside the elevator showing what way the elevator is moving.

4) Add feedback for players if they press non-accessible floor buttons.

5) Add an interesting reaction to the player pressing the alarm bell button.

6) Have the player's fingers animate specifically to push buttons. This means the hands should have a finger pointing towards the closest buttons in some cases.

[Button pushing example]

Results

Tasks

1) Have the elevator buttons work as in real life. (10h -> 17h)

The iteration was a success, but there is a minor bug and some optional features remain unimplemented. You can call the elevator whether it is on your floor or on the other floor. You can open and close the doors from the inside, and you can pick a viable floor and the elevator will take you there. As for optional objectives, the currently active button lights up orange (see GIF) and stays lit while its desired condition is not met. This is closely inspired by the button states in the real elevator. If a button is disabled, such as those for inaccessible floors, it lights up orange for half a second to give the player feedback.

[Open Elevator doors from outside]

[Summon elevator from another floor]

[Open and close elevator doors from inside]

[Transfer between floors with elevator]
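The light behaviour described above can be modelled with a timer and a pending condition. This is a hypothetical Python sketch (`ButtonLight`, `press` and `tick` are invented names); only the half-second flash duration comes from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ButtonLight:
    """Hypothetical model of an elevator button's orange light."""
    enabled: bool = True
    flash_time: float = 0.5  # seconds a disabled button flashes
    _flash_left: float = field(default=0.0, init=False)
    _condition: Optional[Callable[[], bool]] = field(default=None, init=False)

    def press(self, condition: Callable[[], bool]) -> None:
        if self.enabled:
            self._condition = condition         # stay lit until this becomes true
        else:
            self._flash_left = self.flash_time  # brief "rejected" feedback

    def tick(self, dt: float) -> None:
        # Called every frame with the elapsed time in seconds.
        self._flash_left = max(0.0, self._flash_left - dt)
        if self._condition is not None and self._condition():
            self._condition = None

    @property
    def lit(self) -> bool:
        return self._condition is not None or self._flash_left > 0.0


elevator = {"floor": 2}
call_button = ButtonLight()
call_button.press(lambda: elevator["floor"] == 1)  # call the elevator to floor 1
call_button.tick(0.1)
print(call_button.lit)   # True: still waiting
elevator["floor"] = 1
call_button.tick(0.1)
print(call_button.lit)   # False: condition met

floor_3 = ButtonLight(enabled=False)
floor_3.press(lambda: True)
floor_3.tick(0.3)
print(floor_3.lit)       # True: flashing
floor_3.tick(0.3)
print(floor_3.lit)       # False: flash over
```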

The most difficult part of this iteration was making the buttons move along only one axis while keeping a "springy" quality, meaning that a pushed button pops back up. I designed a proper clamping system that kept the button from moving in certain directions and limited its range of movement. This, however, was overridden by the springiness mechanic: somehow, the Unity physics velocity could bypass Mathf.Clamp() to some extent. I eventually achieved the direction clamping by constraining the rigidbody's movement to one axis and having the button collide with a backplate. This still leaves an issue where, in some situations, the button momentarily pops forward by an unreasonable amount when it is stress tested.
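The one-axis spring button can be modelled in one dimension. The sketch below is a hypothetical Python simulation, not the Unity setup, and the stiffness, damping, mass, travel and push values are assumptions. It shows one plausible reason why clamping the position alone is not enough: when the button hits a limit, the velocity pushing it past that limit also has to be cancelled, which is roughly what the collision with the backplate provides in the game.

```python
def simulate_button(push_force, steps, dt=0.01, stiffness=200.0,
                    damping=2.0, mass=0.1, travel=0.01):
    """Hypothetical 1-D spring button. x is the depth along the button's only
    allowed axis: 0 = rest, travel = pressed against the backplate.
    push_force(step) gives the finger's force in newtons; returns depths."""
    x, v = 0.0, 0.0
    depths = []
    for step in range(steps):
        force = push_force(step) - stiffness * x - damping * v  # spring pulls back to 0
        v += force / mass * dt   # semi-implicit Euler: update velocity first
        x += v * dt
        # Hard limits: clamp the position AND cancel the velocity component
        # pointing past the limit. Clamping x alone would leave stale velocity
        # that builds up against the limit and distorts the motion after release.
        if x > travel:
            x, v = travel, min(v, 0.0)
        elif x < 0.0:
            x, v = 0.0, max(v, 0.0)
        depths.append(x)
    return depths


# Press with 5 N for 0.5 s (50 steps of 0.01 s), then release.
depths = simulate_button(lambda step: 5.0 if step < 50 else 0.0, steps=200)
print(max(depths), depths[-1])
```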

Having the buttons call the needed functions properly took about 10 hours of work. The elevator is a complex state machine that also needed stronger limits on which functions could be called.

There are 2 major improvements that should be made in the future, besides the unaccomplished additional objectives. First, the doors can't switch between opening and closing mid-movement: if the doors are closing, you have to wait until they are fully closed before you can open them again. This avoids two coroutines trying to move the doors at the same time, which doesn't break the interaction but makes the doors teleport. Reversing mid-movement was not implemented because the door opening and closing sound effects would require a dynamic cutoff sound design, which was out of scope for this iteration. Second, where there are two elevators side by side (see figure below), the call buttons only summon the left elevator. This doesn't break the interaction, but it may hurt immersion.

[Two elevators side by side]
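A common way to make the doors reversible without two competing coroutines is a single driver that always moves the doors toward one target, so a new command only changes the target. The Python sketch below is hypothetical (`DoorDriver` and its `speed` parameter are invented) and addresses only the motion, not the sound cutoff.

```python
class DoorDriver:
    """Hypothetical single driver for a pair of elevator doors. One update
    loop moves the doors toward a target, so a new command mid-movement only
    changes the target instead of starting a competing coroutine."""

    def __init__(self, speed=1.0):
        self.opening = 0.0  # 0 = fully closed, 1 = fully open
        self.target = 0.0
        self.speed = speed  # fraction of full travel per second

    def open(self):
        self.target = 1.0

    def close(self):
        self.target = 0.0

    def tick(self, dt):
        # Called once per frame; moves at most speed * dt toward the target.
        step = self.speed * dt
        delta = self.target - self.opening
        if abs(delta) <= step:
            self.opening = self.target
        else:
            self.opening += step if delta > 0 else -step
        return self.opening


doors = DoorDriver(speed=1.0)
doors.open()
for _ in range(5):
    doors.tick(0.1)        # doors are now half open
doors.close()              # reverse mid-movement: no jump
positions = [doors.tick(0.1) for _ in range(6)]
print(positions)
```

Since every frame moves the doors by at most `speed * dt`, a reversal can never produce the teleporting seen with two overlapping coroutines.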

Milestone 6 (16.12)

Tasks

1) Have the player's hand lock onto the door handle when grabbing it. (4h)

2) Add automatic doorknob rotation motion when grabbing it. (3.5h)

3) At least partially solve the problem where the door is unpullable because the player is in the way. (2.5h)

Additional non-obligatory objectives are:

1) Add a sound to the doorknob turning to increase immersion.

2) Add a sound to the doors moving to increase immersion.

The problem with the grabbable doors in DeltaVR is best illustrated by the GIF below. First, when the player grabs the door, their hand is not actually on the doorknob. This makes the door difficult to open, not to mention the break in immersion. Second, in almost every case, the player can't pull the door open because it gets stuck behind the player. The latter problem needs to be addressed, but it will require further research; for example, the door likely still shouldn't go through the player's face.

[DeltaVR door issue]

Results

Tasks

1) Have the player's hand lock onto the door handle when grabbing it. (4h -> 8h)

2) Add automatic doorknob rotation motion when grabbing it. (3.5h -> 2h)

3) At least partially solve the problem where the door is unpullable because the player is in the way. (2.5h -> 1h)

Locking the player's hand to the door handle turned out to be very difficult. Eventually, I chose an approach where the player's hand is hidden and replaced with a hand model that is locked to the door. Since grabbing is handled by an uneditable plugin script, it is not easy to tell which hand did the grabbing. So custom colliders detect which hand is close to the door handle and summon the matching hand model on the doorknob. When the player grabs the handle, their hand disappears and the hand on the handle is summoned. Even so, there are still some issues. The main one is what I call "the ghost hand": about 10-20% of the time, either the door hand isn't summoned when the player's hand disappears, or the player's hand doesn't disappear. A similar effect can happen when releasing the grip. This is definitely something to fix in the near future.

[DeltaVR door rework]

[Ghost hand issue]
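Since the plugin does not report which hand grabbed, the choice has to be made from hand positions, as the custom colliders do. The cause of the ghost hand is not established here; the hypothetical Python sketch below only shows one way to rule out inconsistent states, by deriving the visibility of both hand models from a single decision every frame. The 0.15 m grab radius, the positions and all names are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Hand:
    name: str        # e.g. "left" or "right"
    position: tuple  # (x, y, z) in metres


def nearest_hand(hands, handle_pos, max_dist):
    """Return the hand closest to the handle within max_dist, or None."""
    best, best_d = None, max_dist
    for hand in hands:
        d = math.dist(hand.position, handle_pos)
        if d <= best_d:
            best, best_d = hand, d
    return best


def hand_visibility(hands, grabbing, handle_pos, max_dist=0.15):
    """Decide once per frame who holds the handle, then derive every
    visibility flag from that single decision: for each hand, exactly one of
    the player model and the door model is visible."""
    holder = nearest_hand(hands, handle_pos, max_dist) if grabbing else None
    visible = {}
    for hand in hands:
        holding = hand is holder
        visible[hand.name] = not holding
        visible["door_" + hand.name] = holding
    return visible


hands = [Hand("left", (0.0, 0.0, 0.1)), Hand("right", (0.5, 0.0, 0.0))]
handle = (0.0, 0.0, 0.0)
print(hand_visibility(hands, grabbing=True, handle_pos=handle))
print(hand_visibility(hands, grabbing=False, handle_pos=handle))
```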

Turning the door handle was rather straightforward and could be achieved after some relearning of how animations work. The problem was that the original door handle had its centre point about a metre away from the actual object, so ordinary rotation was not an option. It turned out the easiest solution was to make a new door handle. This also gave me an opportunity to redesign it, since the old handle had a tiny air gap between it and the door, and its shape didn't resemble the door handles in the real Delta building. After some work in Blender, the new design was imported and configured, and the door handle turning could then be implemented (see above).

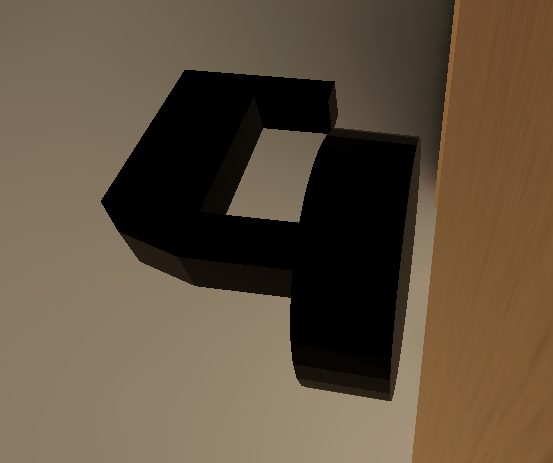

[Old DeltaVR door handle]

[Real Delta building door handle]

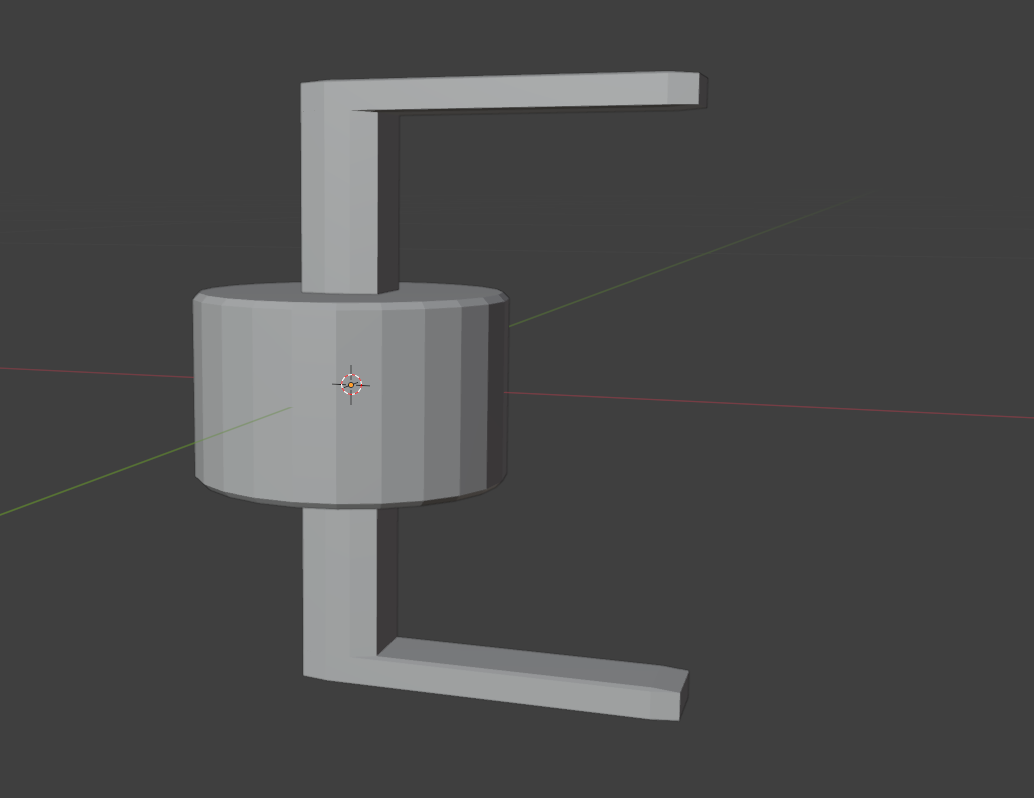

[Door handle rework in Blender]

[Door handle rework in DeltaVR]
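Why a distant centre point rules out ordinary rotation can be seen from the rotation-about-a-pivot formula. The Python sketch below uses made-up top-down coordinates: a grip point 0.1 m from the spindle, an old pivot about 1 m away as described above, and an assumed 30-degree handle press. Unity's `Transform.RotateAround` applies the same idea in 3D.

```python
import math


def rotate_about(point, pivot, angle_deg):
    """Rotate a 2-D point counter-clockwise about an arbitrary pivot."""
    a = math.radians(angle_deg)
    dx, dy = point[0] - pivot[0], point[1] - pivot[1]
    return (pivot[0] + dx * math.cos(a) - dy * math.sin(a),
            pivot[1] + dx * math.sin(a) + dy * math.cos(a))


grip = (1.1, 0.0)       # a point on the lever, 0.1 m from the spindle
spindle = (1.0, 0.0)    # the axis the handle should turn around
old_pivot = (0.0, 0.0)  # centre point about 1 m away, as in the old model

turn = -30  # degrees; an assumed handle press
moved_ok = math.dist(grip, rotate_about(grip, spindle, turn))
moved_bad = math.dist(grip, rotate_about(grip, old_pivot, turn))
print(round(moved_ok, 3), round(moved_bad, 3))  # 0.052 0.569
```

With the pivot at the spindle, the grip moves about 5 cm; with the old pivot, the same rotation throws the handle more than half a metre away from the door.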

Compared to the other tasks, the door getting stuck behind the player was solved rather easily. The player's capsule collider object (see the green capsule-shaped lines) was placed on a custom layer that ignores door object colliders. This should address a lot of the confusion.

[Player collider solution]
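Unity decides which layers interact through its Layer Collision Matrix (Project Settings > Physics), which can also be changed from code with `Physics.IgnoreLayerCollision`. The Python sketch below models that table as bitmasks; the layer indices are hypothetical.

```python
# Hypothetical layer indices; Unity supports 32 layers.
DEFAULT, PLAYER_BODY, DOOR = 0, 8, 9


class CollisionMatrix:
    """Symmetric 'which layers collide' table stored as one 32-bit mask per
    layer, mirroring the idea behind Unity's Layer Collision Matrix."""

    def __init__(self):
        self.masks = [0xFFFFFFFF] * 32  # by default every layer hits every layer

    def ignore(self, a, b, ignore=True):
        for x, y in ((a, b), (b, a)):   # keep the table symmetric
            if ignore:
                self.masks[x] &= ~(1 << y)
            else:
                self.masks[x] |= 1 << y

    def collides(self, a, b):
        return bool(self.masks[a] & (1 << b))


matrix = CollisionMatrix()
matrix.ignore(PLAYER_BODY, DOOR)          # the player's capsule passes through doors
print(matrix.collides(PLAYER_BODY, DOOR))     # False
print(matrix.collides(PLAYER_BODY, DEFAULT))  # True: walls and floors still block
```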

This milestone brought many potential door improvements to mind. First, the door movement being disproportionate to the actual hand movement turns out to be an issue with the GrabComponent plugin script. In short, when the door is grabbed, the script assumes it is grabbed at its centre, not off to the side where the handle collider detects the grab. This isn't an issue for objects you can grab and carry, but it becomes apparent when a hinge joint is also affecting the grabbed object. I tried to solve this with a custom door that has its centre at the handle, but this resulted in glitchy, randomly teleporting doors, caused by the hinge joint. Sadly, I did not have time to solve this issue. I could have made the handle the grabbable object, but I wasn't sure how to attach it to its parent door object. Perhaps making the handle the parent object would fix this.
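A hinge-aware alternative is to measure the angle the hand sweeps around the hinge, starting from the point that was actually grabbed, so the handle stays under the hand regardless of where the door's centre is. The Python sketch below is a hypothetical top-down model, not GrabComponent's behaviour; the function name and the 0.85 m handle distance are assumptions.

```python
import math


def door_angle_from_hand(hinge, grab_point, hand, angle_at_grab):
    """Hypothetical hinge-aware grab in a top-down 2-D view: the door turns by
    the angle the hand has swept around the hinge since the grab, measured
    from the point actually grabbed."""
    start = math.atan2(grab_point[1] - hinge[1], grab_point[0] - hinge[0])
    now = math.atan2(hand[1] - hinge[1], hand[0] - hinge[0])
    swept = math.degrees(now - start)
    swept = (swept + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)
    return angle_at_grab + swept


hinge = (0.0, 0.0)
handle = (0.85, 0.0)  # assumed handle distance from the hinge, door closed
a = math.radians(30)
hand_after = (0.85 * math.cos(a), 0.85 * math.sin(a))  # hand swung 30 degrees
print(round(door_angle_from_hand(hinge, handle, hand_after, 0.0), 6))  # 30.0
```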

Second, the doors don't have a closed state. It would be better if the handle could only be turned while the door is closed, and if the door stopped swinging once it reaches the closed position when the player closes it. Such an implementation would also be a stepping stone toward having some doors locked and openable, though this would need further groundwork to be implemented well.
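The closed state proposed above could work like a latch. The hypothetical Python sketch below (`LatchingDoor`, the 2-degree latch angle, and a `locked` flag standing in for future locked doors are all assumptions) lets the handle turn only while the door is closed, and snaps the door shut when it is being closed and comes within the latch angle.

```python
class LatchingDoor:
    """Hypothetical door with a closed state: it can only swing after the
    handle is turned, and it latches shut when closed near 0 degrees."""

    def __init__(self, latch_angle=2.0):
        self.angle = 0.0          # 0 = closed
        self.latched = True
        self.latch_angle = latch_angle
        self.locked = False       # stepping stone toward locked doors

    def turn_handle(self):
        # The handle only does something while the door is closed and unlocked.
        if self.latched and not self.locked:
            self.latched = False
            return True
        return False

    def swing_to(self, requested_angle):
        if self.latched:
            return self.angle     # held shut by the latch
        closing = abs(requested_angle) < abs(self.angle)
        self.angle = requested_angle
        # Only relatch while closing, so a freshly opened door is not caught.
        if closing and abs(self.angle) <= self.latch_angle:
            self.angle = 0.0      # snap shut and stop swinging
            self.latched = True
        return self.angle


door = LatchingDoor()
print(door.swing_to(40.0))   # 0.0: still latched
door.turn_handle()
door.swing_to(1.0)
print(door.swing_to(40.0))   # 40.0: open
print(door.swing_to(1.5))    # 0.0: latched shut again
```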

Repository link: https://cgvrgit.ulno.net/cgvr/DeltaVR